There’s a misconception that I’ve encountered among our research teams lately.

The idea is that the page being split tested may be too far from a specified conversion point for a change in that conversion rate to be attributed to the change made in the test treatment.

For example, when testing on the homepage, some argue that using the sale as the conversion point or primary success metric is unreliable, because the homepage is too far from the sale and too dependent on the performance of the pages or steps between the test and the conversion point.

This is only partially true, depending on how well the funnel is controlled.

Theoretically, if traffic is randomly sampled between the control and treatment with all remaining aspects of the funnel consistent between the two, we can attribute any significant difference in performance to the changes made to the treatment, regardless of the number of steps between the test and the conversion point.
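To make "randomly sampled" concrete, here is a minimal sketch of one common way to split traffic: hashing a stable visitor ID together with the experiment name. It assumes each visitor carries a stable ID (for example, a first-party cookie); `assign_arm` and the experiment name are hypothetical and not any particular testing tool's API. The point is that the arm depends only on the hash, not on anything else in the funnel, so the two groups differ only in the treatment.

```python
import hashlib


def assign_arm(visitor_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically assign a visitor to 'control' or 'treatment'.

    Hashing the (hypothetical) experiment name with the visitor ID gives each
    visitor a stable, effectively random bucket: a returning visitor always
    sees the same arm, and assignment ignores everything else in the funnel.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x1_0000_0000
    return "treatment" if bucket < treatment_share else "control"


if __name__ == "__main__":
    arms = [assign_arm(f"visitor-{i}", "homepage-test") for i in range(10_000)]
    print("treatment share:", arms.count("treatment") / len(arms))
```

Over many visitors the treatment share should land close to 50%, and including the experiment name in the hash keeps one test's split independent of another's.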

More often than not, however, practitioners don't take the steps necessary to properly control the experiment. Other departments may launch new promotions or test other channels or parts of the site at the same time, producing unclear, mixed results.

So I wanted to share a few quick tips for controlling your tests:

Tip #1. Run one test at a time

Running multiple split tests in a single funnel introduces a critical validity threat: the funnel is no longer controlled, and because prospects may have entered a combination of split tests, we can't attribute a difference in performance to any one of them.

Employing a unified testing queue or schedule may provide transparency across multiple departments and prevent prospects from entering multiple split tests within the same funnel.
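A unified queue can be as simple as a shared schedule that refuses to book two tests into the same funnel at overlapping times. The sketch below is illustrative only: `ScheduledExperiment`, `ExperimentQueue`, and the funnel labels are hypothetical names, and date ranges are treated as half-open (the end date is the first day the test is no longer running).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class ScheduledExperiment:
    name: str
    funnel: str   # hypothetical label for the funnel the test lives in
    start: date   # first day the test runs
    end: date     # first day the test is no longer running (exclusive)


@dataclass
class ExperimentQueue:
    """One schedule shared by every department that runs split tests."""
    tests: list[ScheduledExperiment] = field(default_factory=list)

    def schedule(self, test: ScheduledExperiment) -> None:
        for other in self.tests:
            same_funnel = other.funnel == test.funnel
            # Half-open intervals overlap when each starts before the other ends.
            overlaps = test.start < other.end and other.start < test.end
            if same_funnel and overlaps:
                raise ValueError(
                    f"{test.name!r} overlaps {other.name!r} in funnel {test.funnel!r}"
                )
        self.tests.append(test)
```

Tests in different funnels, or back-to-back tests in the same funnel, are accepted; anything that would let a prospect enter two tests in one funnel is rejected before launch.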

Tip #2. Choose the right time to launch a test

External factors such as advertising campaigns and market changes can affect the reliability or predictability of your results. Launching a test during a promotion or holiday season, for example, may bias prospects toward a treatment that won't perform the same way during “normal” times.

Being aware of upcoming promotions or marketing campaigns as well as having an understanding of yearly seasonality trends may help indicate the ideal times to launch a test.
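Once known promotions and seasonal peaks are written down as blackout windows, picking a launch date becomes a small search. The sketch below assumes a hypothetical list of `(start, end)` blackout dates (half-open, end exclusive) and a planned test duration; `earliest_clear_launch` is an illustrative helper, not part of any tool.

```python
from __future__ import annotations

from datetime import date, timedelta


def earliest_clear_launch(
    earliest: date,
    duration_days: int,
    blackouts: list[tuple[date, date]],
    horizon_days: int = 365,
) -> date | None:
    """Return the first launch date whose whole test window avoids every blackout.

    Test windows and blackouts are half-open: [start, end).
    Returns None if no clear window starts within the horizon.
    """
    for offset in range(horizon_days):
        start = earliest + timedelta(days=offset)
        end = start + timedelta(days=duration_days)
        # Clear if the window ends before the blackout begins or starts after it ends.
        if all(end <= b_start or start >= b_end for b_start, b_end in blackouts):
            return start
    return None
```

For instance, with a hypothetical holiday promotion blocked out from November 20 to December 2, a two-week test that can't finish before the promotion starts would be pushed to December 2.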

Diminishing impact

One thing worth keeping in mind is that the measurability of impact will often diminish with increased distance between the test and the conversion point.

For example, a homepage test is more likely to reach a statistically significant difference on clickthrough rate to the immediate next step than on conversion rate for a sale occurring six steps deeper into the funnel.

This is often due to the natural decrease in sample size at each step as prospects drop out of the funnel along the way. There’s also the possibility that the change made in the treatment loses psychological effect as prospects progress into the funnel.
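The sample-size effect can be seen with a standard pooled two-proportion z-test. All numbers below are hypothetical: 10,000 visitors per arm, a homepage clickthrough of 4,000 vs. 4,200, and 50% of prospects continuing at each of the five later steps, so the treatment keeps the same 5% relative lift all the way to the sale. It is a sketch of the arithmetic, not a full analysis.

```python
from __future__ import annotations

import math


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.0, 1.0
    z = (p2 - p1) / se
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)), the two-sided p-value.
    return z, math.erfc(abs(z) / math.sqrt(2))


VISITORS = 10_000                      # hypothetical visitors per arm
clicks_control, clicks_treatment = 4_000, 4_200
STEP_RETENTION = 0.5                   # hypothetical: half continue at each later step
LATER_STEPS = 5                        # the sale is six steps from the homepage

sales_control = round(clicks_control * STEP_RETENTION ** LATER_STEPS)
sales_treatment = round(clicks_treatment * STEP_RETENTION ** LATER_STEPS)

z_ctr, p_ctr = two_proportion_z_test(clicks_control, VISITORS, clicks_treatment, VISITORS)
z_sale, p_sale = two_proportion_z_test(sales_control, VISITORS, sales_treatment, VISITORS)
print(f"clickthrough: z = {z_ctr:.2f}, p = {p_ctr:.3f}")
print(f"sale:         z = {z_sale:.2f}, p = {p_sale:.3f}")
```

With these numbers the clickthrough difference is significant (z of roughly 2.9) while the same relative lift at the sale, now only 125 vs. 131 conversions, is not (z of roughly 0.4).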

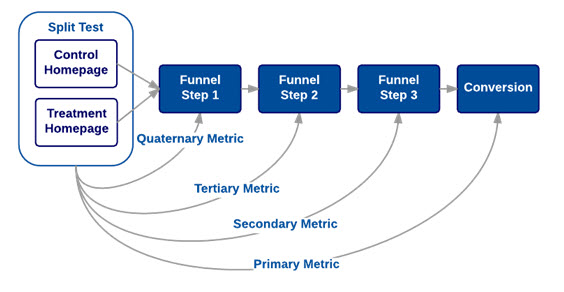

A hierarchy of metrics

With diminishing impact in mind, we can’t evaluate the success of a test based on the conversion rate if the sample size is too low at the conversion point to yield a statistically significant difference. We also shouldn’t evaluate the success of a test based exclusively on clickthrough rate to the next step due to the dynamic of quality vs. quantity of traffic.
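One way to check in advance whether the conversion point can carry the test is the usual normal-approximation sample-size formula for comparing two proportions. The rates below are hypothetical and reuse a 5% relative lift, once at a 40% clickthrough rate and once at a 1.25% sale rate; `sample_size_per_arm` is an illustrative helper, and the defaults (two-sided alpha of 0.05, 80% power) are common conventions rather than requirements.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(p_control: float, p_treatment: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per arm for a two-sided two-proportion test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    effect = p_treatment - p_control
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical rates: the same 5% relative lift one step in vs. six steps in.
n_clickthrough = sample_size_per_arm(0.40, 0.42)
n_sale = sample_size_per_arm(0.0125, 0.013125)
print(n_clickthrough, n_sale)
```

Under these assumptions the clickthrough comparison needs roughly 9,500 visitors per arm, while the sale needs roughly half a million, which is why a test can be well powered at the next step and hopeless at the sale.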

If we increase clickthrough rate to the next step but there is no significant difference in conversion rate, we may have only pushed more unqualified traffic to the next step without actually optimizing the process or clarifying the value proposition.

This is why, in order to fully understand the impact of a test, we often use a hierarchy of metrics in which we measure the success of a test based on the furthest point in the funnel we were able to significantly impact.

This also allows us to see that, though we may have failed to reach a significant difference in conversion rate, perhaps we did achieve a significant lift in add-to-cart rate (the percentage of prospects who added a product to their cart).

In this case, we can say that the variable(s) manipulated in the homepage treatment produced a significant increase in add-to-cart rate and, for all intents and purposes, outperformed the control. If neither conversion rate nor add-to-cart rate shows a significant difference, we should use the next furthest point in the funnel as the success metric: perhaps the percentage of visitors that reach the product page.
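The hierarchy can be expressed as a walk from the deepest metric back toward the test, stopping at the first significant difference. The sketch below is a minimal illustration: `furthest_significant_metric`, the metric names, and the counts (10,000 visitors per arm) are hypothetical, and it uses the standard pooled two-proportion z-test at a two-sided alpha of 0.05.

```python
from __future__ import annotations

import math


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.0, 1.0
    z = (x2 / n2 - x1 / n1) / se
    return z, math.erfc(abs(z) / math.sqrt(2))


def furthest_significant_metric(
    metrics: list[tuple[str, int, int]],
    n_control: int,
    n_treatment: int,
    alpha: float = 0.05,
) -> tuple[str, float] | None:
    """Return (metric name, z) for the deepest metric with p < alpha, else None.

    `metrics` holds (name, control count, treatment count), ordered from the
    step nearest the test to the step furthest into the funnel.
    """
    for name, x_control, x_treatment in reversed(metrics):
        z, p = two_proportion_z_test(x_control, n_control, x_treatment, n_treatment)
        if p < alpha:
            return name, z  # a positive z means the treatment outperformed the control
    return None


funnel = [
    ("product_page", 3_000, 3_150),
    ("add_to_cart", 900, 1_000),
    ("sale", 200, 210),
]
print(furthest_significant_metric(funnel, 10_000, 10_000))
```

Here the sale difference is not significant, so the walk falls back to add-to-cart rate, which is. Checking several metrics at the same alpha raises the chance of at least one false positive, so a stricter threshold may be worth considering.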

In the end, no conversion metric is too distant from the test for attribution. The reality, however, is that testing can get messy. This is why it’s important to take the time to properly control our experiments, consider which metrics need to be evaluated and establish a clear understanding of the conditions for success.

