Some marketers simply drop two landing pages into a split-testing tool, click a button, and then push live whichever page shows the larger results number, declaring it the “winner.”

If you really want to benefit from split testing, you need to do a little more. You need a basic understanding of what you’re really doing when you’re testing. That includes validity, which is, at its most basic level, an assurance that the results from your tests actually reflect what is going on in the real world.

Let me show you an experiment with a MECLABS Research Partner in which an understanding of validity helped the team find a conversion lift that would otherwise have been missed.

Background: Consumer company that offers online brokerage services

Goal: To increase the volume of accounts created online

Primary research question: Which page design will generate the highest rate of conversion?

Test Design: A/B/C/D multi-factor split test

CONTROL

The control had heavily competing imagery and messages, as well as multiple calls-to-action. (Please Note: All creative samples have been anonymized.)

TREATMENT #1

Most of the elements on the page are unchanged; only one block of information has been optimized.

In that block of copy, the team added a headline and bulleted copy highlighting key value proposition points.

The “Chat with a Live Agent” call-to-action was removed.

Finally, a large, clear call-to-action was added.

TREATMENT #2

In Treatment #2, the left column remained the same, but the team removed footer elements.

This treatment features long copy with a vertical flow. Awards and testimonials were added to the right-hand column.

At the bottom is a large, clear call-to-action similar to the one in Treatment #1.

TREATMENT #3

Treatment #3 is similar to Treatment #2, except the left-hand column width was reduced even further. In this treatment, the left-hand column has a more navigational role.

As in Treatment #2, however, it still uses a long-copy, vertical-flow design with a single call-to-action.

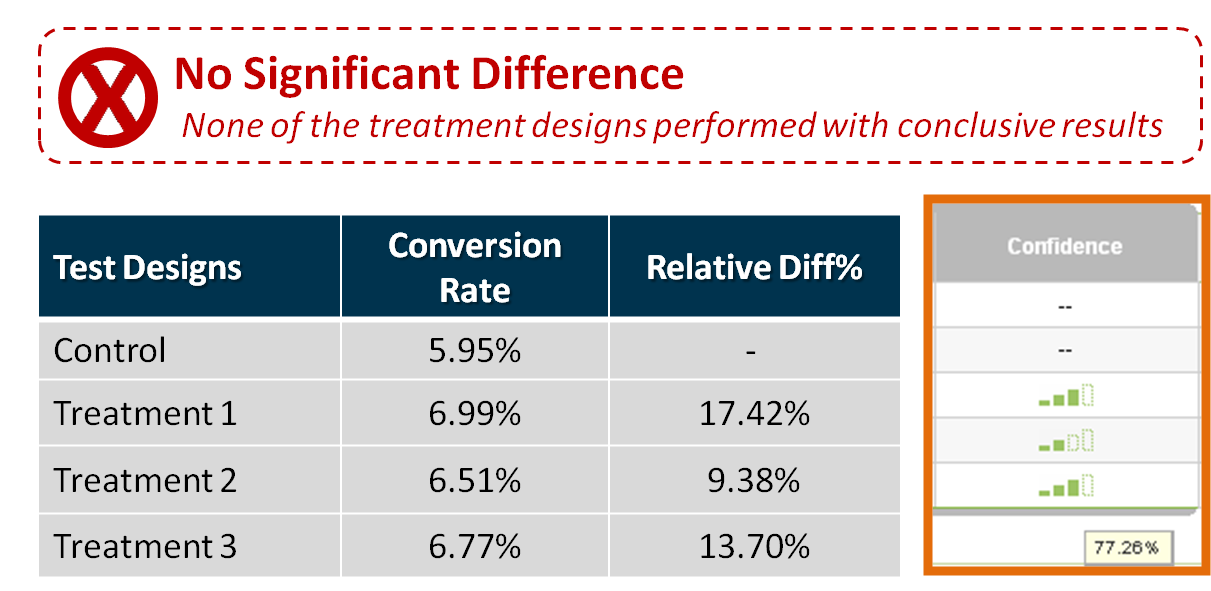

Results

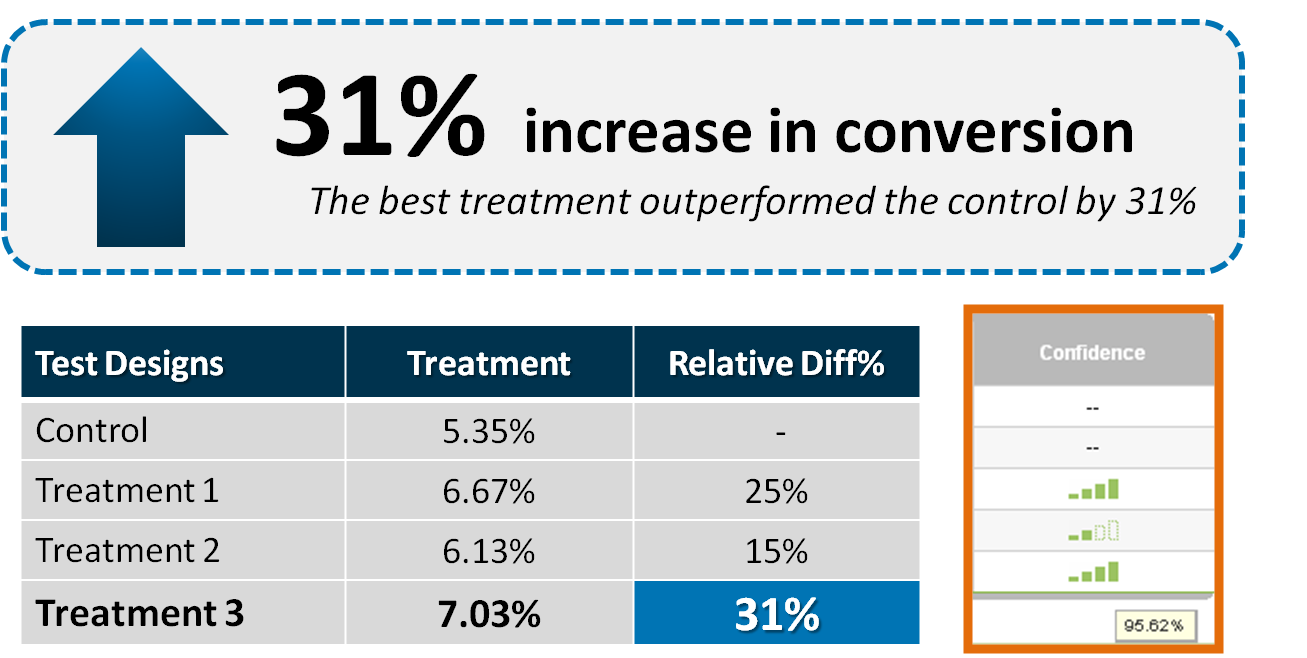

According to the testing platform the MECLABS team was using, the aggregate results were inconclusive: none of the treatments outperformed the control by a statistically significant margin at a 95% level of confidence.
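To make “significant at a 95% level of confidence” concrete, here is a minimal sketch of one common way to compare a treatment against a control: a two-sided, two-proportion z-test. This is an assumption for illustration. The article does not say which statistical method the MECLABS testing platform used, and the function names below are hypothetical.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided two-proportion z-test (hypothetical helper).

    conv_a / n_a: conversions and visitors for the control.
    conv_b / n_b: conversions and visitors for the treatment.
    Returns (z, p_value); z > 0 means the treatment converted better.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both pages convert equally
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    if p_pool in (0.0, 1.0):
        raise ValueError("pooled rate is 0 or 1; the test is undefined")
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def is_significant(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """True if the difference is significant at the given confidence level."""
    _, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    return p_value < 1 - confidence
```

With made-up numbers, a control at 200 conversions out of 10,000 visitors and a treatment at 230 out of 10,000 would not clear the 95% bar. The treatment looks about 15% better, but a gap of that size is still plausibly noise at this sample size.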

Experiment validity threat

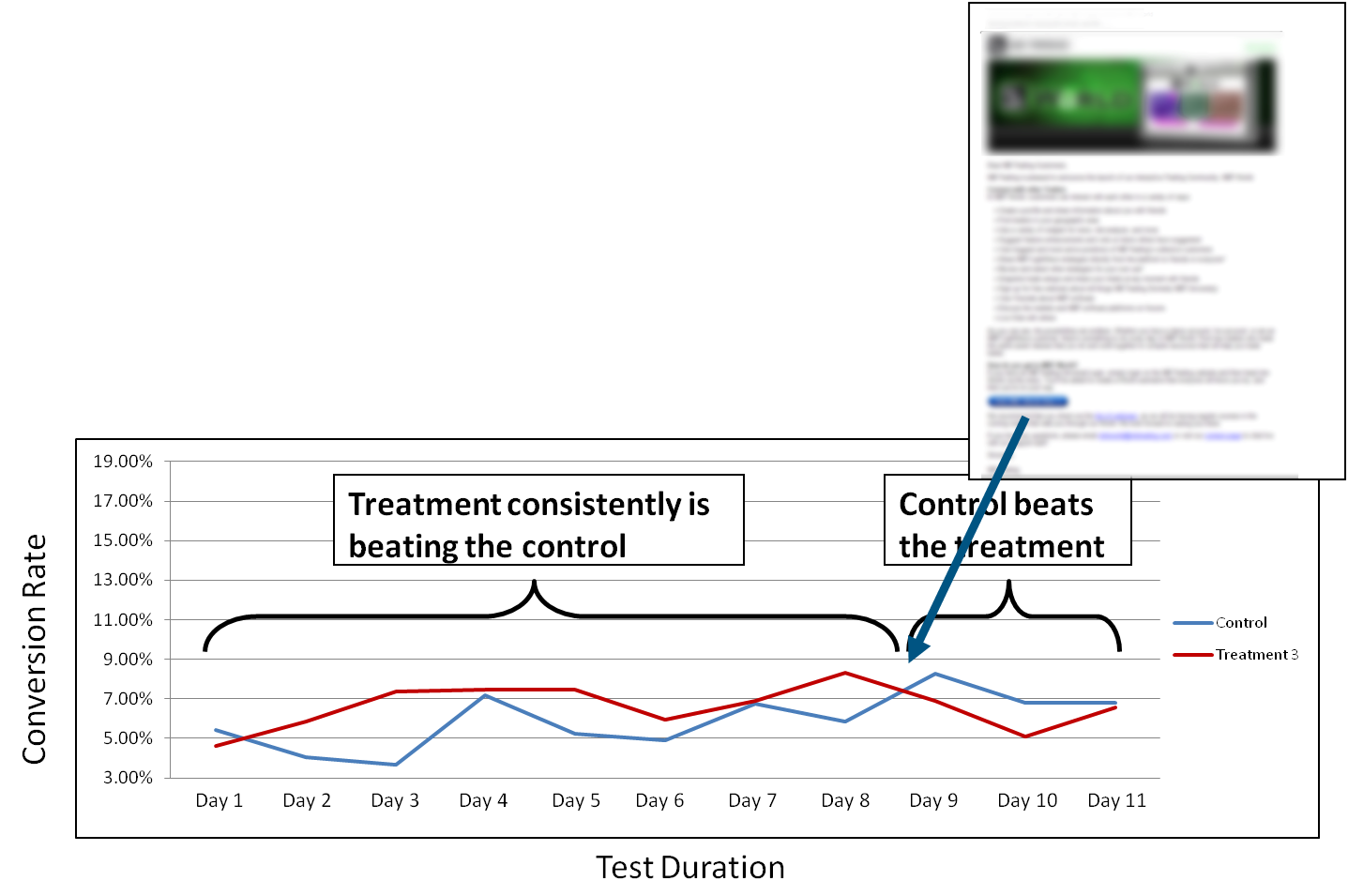

However, the team noticed an interesting performance shift in the control and treatments toward the end of the test.

They discovered that an email sent during the test had skewed the sampling distribution.

What they were able to learn from this test

After the team excluded the data collected after the email had been sent out, each of the treatments substantially outperformed the control, and the results were conclusive.
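Mechanically, excluding post-email traffic means recomputing each arm’s conversions and visitors using only the visits that arrived before the send time. The sketch below shows that step under stated assumptions. The `Visit` record shape, the arm names, and the `cutoff` parameter are all hypothetical. The article does not describe the platform’s export format or the exact exclusion procedure. The cleaned counts would then be re-tested for significance.

```python
from collections import defaultdict
from datetime import datetime
from typing import Iterable, NamedTuple, Optional


class Visit(NamedTuple):
    # Hypothetical record shape; real testing platforms export different fields
    timestamp: datetime
    arm: str  # e.g. "control", "treatment_1"
    converted: bool


def conversion_by_arm(
    visits: Iterable[Visit], cutoff: Optional[datetime] = None
) -> dict:
    """Return {arm: (conversions, visitors)}.

    If cutoff is given, visits at or after it (e.g. after the email
    went out) are ignored.
    """
    totals = defaultdict(lambda: [0, 0])
    for v in visits:
        if cutoff is not None and v.timestamp >= cutoff:
            continue  # drop traffic that arrived after the cutoff
        totals[v.arm][0] += int(v.converted)
        totals[v.arm][1] += 1
    return {arm: (conv, n) for arm, (conv, n) in totals.items()}
```

Filtering by time, rather than dropping individual email clickers, removes the whole period in which the traffic mix changed. The trade-off is a smaller sample, so this only helps when enough pre-email data remains to reach significance.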

You can’t always overcome a validity threat in this manner. But by understanding validity, you can judge whether the results you’re seeing are likely giving you intelligence about the customer that you can use to improve your marketing efforts.

You can learn more about validity in this free resource: Bad Data: The 3 validity threats that make your tests look conclusive (when they are deeply flawed).

Related Resources:

Webinar Testing: Slight title change produces 45% increase in clickthrough rate

Online Marketing Tests: A data analyst’s view of balancing risk and reward

Customer Theory: How we learned from a previous test to drive a 40% increase in CTR

Social Media Microsite Test: 3 lessons based on a 154% increase in leads