“What is the most effective copy for my buttons?” That is one of the most frequent questions we receive at MarketingExperiments. And for good reason. As our testing shows, simple changes to the copy on your call-to-action buttons can generate impressive results.

Yet even though we know that, we don’t always optimize our button copy. And in this case, I’m not using the royal “we” to try to refer to all marketers. Right here on the MarketingExperiments blog, where all we do is write about testing, optimization, and messaging, we had an unoptimized button.

But, fortunately, we also have an audience of savvy optimizers who constantly keep us on our toes. In fact, on a recent post, “Live Experiment (Part 1): How many marketers does it take to optimize a webpage?”, Thomas Strunk left a comment that rightly took us to task…

Also just another thing I just noticed is that your little green button below says “Submit Comment” and I thought that the word “Submit” was a BAD word 🙂 Maybe you could do an A/B test on it with a button that says “Share Your Thoughts” or something like that… Who knows you might get more responses to your posts?

Good point, Thomas. To the splitter!

CONTROL

The control button was something you’ve seen a million times before, and probably the most popular button on the Internet – the Submit button. Or more specifically, “Submit Comment.”


As we’ve found in test after test, the default “Submit” button tends to underperform. So we wanted to create a treatment for this blog, as well as for the MarketingSherpa blog, that better conveyed the value of commenting.

TREATMENT

Frankly, this was easier said than done. One of the biggest points of value many people see in commenting on a blog is getting a link back to their site. Our audience is made up of marketers and, frankly, I think marketers are even worse about this than the average Joe.

However, spam comments that say “great post” add no real value for you, the reader. That’s why we delete many of the comments we get on our blogs. If it doesn’t add value to the reader, it doesn’t make the cut.

So we certainly didn’t want a button that played up a benefit some in our audience would like, but one we don’t want to give them at the expense of the majority of our audience.

So which words accurately describe the value you can expect to receive from commenting on our blogs?

As Senior Editorial Analyst Austin McCraw replied to Thomas…

“‘Share your thoughts’ is better, but still seems like it is too ‘action-centric’ rather than ‘value-centric.’ What does the reader get from sharing their thoughts? Why did you comment? What was in it for you?”

Even Thomas was perplexed…

“I am not really sure what was in it for me? I guess I am just thankful for all the info you guys let us in on and I know that it is nice to get feedback on all your hard work. I am anxious to see what a ‘value-centric’ wording will be on a ‘submit your comment’ button. Because I could totally use something better for my site too. Looking forward to see what you come up with.”

Frankly, it took a little soul searching on our part. Why do we even want you to comment in the first place?

And then it hit me. It goes back to the line I use to sign off on every issue of the MarketingExperiments Journal that we send, “Our job is to help you do your job better. Let us know how we can help.”

We’re optimizers. Frankly, we’re constantly trying to make everything we do better. And that’s where your feedback, from a community of professional marketers, is so helpful to us.

But to help you do your job better, we also want you to interact with each other. Sometimes you can learn more from your peers than you can from us. It’s why we encourage you to tweet on #webclinic during Web clinics. It’s why we invite you to present your case studies to your peers at marketing Summits.

Essentially, we want you to “Join the conversation.” Yes, I know there is nothing shockingly new about that phrase. But, it just seemed to fit the bill…


RESULTS

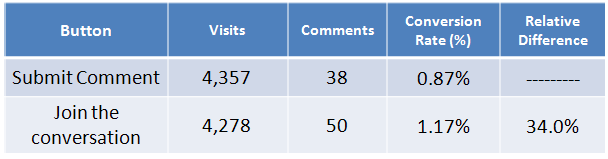

We found a 34.0% lift on the MarketingExperiments blog, significant at an 82.9% level of confidence. The results are above.
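For readers who want to reproduce figures like these, here is a minimal sketch of one common approach: a pooled two-proportion z-test under a Normal approximation, reporting the relative lift and the two-tailed level of confidence (one minus the p-value). The post doesn’t say which tool produced its numbers, so treat the method as an assumption; the function name `ab_test` and the example counts are hypothetical, not this test’s data.

```python
from math import sqrt
from statistics import NormalDist


def ab_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> dict:
    """Pooled two-proportion z-test (Normal approximation).

    conv_a / n_a: conversions and visits for the control (e.g. "Submit Comment").
    conv_b / n_b: conversions and visits for the treatment (e.g. "Join the conversation").
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Relative difference ("lift") of the treatment over the control.
    lift = (rate_b - rate_a) / rate_a
    # Pooled rate under the null hypothesis that both buttons convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    # Two-tailed level of confidence = 1 - p-value.
    p_two_tailed = 2 * (1 - NormalDist().cdf(abs(z)))
    return {"rate_a": rate_a, "rate_b": rate_b, "lift": lift,
            "z": z, "confidence": 1 - p_two_tailed}


# Hypothetical counts, for illustration only.
result = ab_test(100, 10_000, 130, 10_000)
print(f"lift={result['lift']:.1%}  z={result['z']:.2f}  confidence={result['confidence']:.1%}")
```

A large relative lift can still come with a modest level of confidence when the number of conversions is small, which is the situation this test was in.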

We did not find a significant difference on the MarketingSherpa blog, even at an 80% level of confidence.

What can you learn from this test?

- At a very base level, it’s worth testing your buttons. Compared to, say, an entire shopping cart process, it’s an easy test to run. Yet, because it’s so close to the point of conversion, it may have a significant impact. After all, think about all of the money, time, and resources you invest driving people to that button.

- Think about the messaging on your buttons. Every action you expect your audience to take should have a value. While, admittedly, there is only a small value in joining a conversation for some (and perhaps no value for many), there is certainly more value than in simply saying, “Submit Comment.” Besides, what are you really telling your audience when you ask them to submit? What is their expected response? As Flint McGlaughlin, Managing Director of MarketingExperiments, likes to say, “I SUBMIT TO THE GODS OF MARKETING!”

- No significant difference is valuable. As you can see at the bottom of this blog post, we changed the button to “Join the conversation” on our blogs even though we did not find a significant difference at the 95% level of confidence (the level we usually require to make a business decision).

That “no significant difference” finding still matters. “Join the conversation” better ties into the message we try to send our audience, but we didn’t want to hurt conversion. Knowing that the more consistent messaging was unlikely to hurt conversion gave us the confidence to make the change.

Related Resources

Email Marketing Optimization: How you can create a testing environment to improve your email results

Marketing Testing and Optimization: How to begin testing and drive towards triple-digit ROI gains

Fundamentals of Online Testing: Online testing course

Even though you usually delete comments that just say ‘great post’, I will give it a try. 🙂 I would also prefer ‘Join the conversation’ to ‘Submit Comment’, because the second phrase is really faceless and widespread all over the web. BUT, as I am writing now, I see a very cool ‘Join the conversation’ button, while I am the first to comment and no conversation is taking place so far. Thus, I suggest changing the call to action to ‘Start a conversation’ if there are no comments yet, then leaving ‘Join the conversation’ for the second person to comment. 🙂 What do you think?

Helen,

That’s why I love writing on this blog. We have such a crackerjack audience.

That is a fantastic idea, Helen. I just grabbed our IT whiz, Steve Beger, while he was walking by my office with his morning coffee. (I was so emphatic he thought the site must have crashed.) We’ll be implementing your idea on the blog posthaste, Helen.

It is my pleasure to make a contribution, Daniel. I subscribed to your RSS feed just today, and now I see I will be a regular reader of your blog. It is nice to know that bloggers listen to their readers. 🙂

Mr. Burstein,

I was unable to listen to the recent copywriting webinar in real time, so you can imagine my excitement when I saw the replay link in an email this morning. However, when I clicked the link, I was forwarded to a page with nothing on it.

Anthony,

Sorry about that. This is, occasionally, the price of constant optimizing. We were optimizing the MarketingExperiments homepage and accidentally knocked those pages offline momentarily. Ain’t technology grand?

But they are back up now, and you can view the copywriting Web clinic replay at your convenience.

Great post on an important topic. I’d be interested in what words others have used to generate interesting responses from the blog readers.

Daniel,

Thanks so much for this post. Over the last year, I’ve had to protect my time a little more aggressively. I took a second (and third) look at everything I was reading, watching, listening to, etc. Many voices got removed from ‘my list’, but not MarketingExperiments.

I LOVE your blog and articles and webinars for one main reason: they are based on real-world testing, not random theories. The solid optimizing tips and numbers-based proof you share with us are consistently the best I find anywhere, and they’re always a valuable learning opportunity.

Thanks for the hard work you and your team do and for the outstanding material you share with us. It’s a HUGE value.

Hi Daniel,

Thanks for showing us the results of changing the wording on the comment button! I am glad I brought this idea up to you 😉 This will be very helpful to all of us.

Thanks, Helen, for that great idea too, changing the first word to “Start”. Good thinking!

Thanks a lot for all your articles about ways to improve our performances.

I’m not a native speaker, but would it make sense to change your wording to “Enrich the experiments!”?

Something that tells readers that their opinions and comments will really help others and add value.

I like the topic, and I think that this result could turn out to be a statistically significant difference in the long run. I tend to be skeptical about results when I have fewer than 100 events in both conditions of an A/B split test. When I run a Z approximation to test the significance of the difference between the two proportions in the test you mentioned, I get a Z value of 1.372, which puts me well under statistical confidence for a two-tailed test and somewhat under statistical confidence for a one-tailed test. What have you found to be useful when making a call under conditions when statistical confidence is below the typical 95%?
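The gap Greg describes between one- and two-tailed confidence can be sketched by converting a single z value into both levels of confidence. This assumes a standard Normal distribution, and that the hypothesized direction for the one-tailed case is “treatment beats control” (positive z); `confidence_from_z` is a hypothetical helper name.

```python
from statistics import NormalDist


def confidence_from_z(z: float) -> tuple[float, float]:
    """Return (one_tailed, two_tailed) levels of confidence for a z statistic.

    One-tailed: confidence that the treatment beats the control, assuming the
    hypothesized direction is "treatment > control" (positive z).
    Two-tailed: confidence that the two differ in either direction.
    """
    one_tailed = NormalDist().cdf(z)
    two_tailed = 2 * NormalDist().cdf(abs(z)) - 1
    return one_tailed, two_tailed


# Greg's Z value from the comment above.
one, two = confidence_from_z(1.372)
print(f"one-tailed: {one:.1%}  two-tailed: {two:.1%}")
```

Both figures fall below 95%, with the one-tailed figure closer to it, which matches the “well under” versus “somewhat under” distinction in the comment.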

Hi Greg, thanks for your comment. I discussed it with Bob Kemper, the Senior Director of Sciences here at MECLABS. I needed his help because I was in a bit of a quandary. You see, I know for a fact that I’m never wrong. That’s just a given. However, the empirical evidence you provided conflicted with my view of the world (re: never being wrong).

In the end, you’ve made an excellent point, and I’m encouraged (and grateful) that both you and Bob ‘checked my math’, particularly given that in this case I ‘fat-fingered’ some data entry into my stats tool and listed a couple of erroneous numbers (an innate risk with the blog, as these posts aren’t subjected to the pre-pub figure checks that the research pubs are). A couple of related observations:

• First, the errors in the prior version of the post. I’m updating it now with the right figures so subsequent readers don’t have to suffer the same confusion you rightly did, but here are the corrections for reference:

o The Conversion rate for the Control button (“Submit Comment”) was listed as “0.84%” in the original post and has been corrected to “0.87%” (i.e., 38 / 4,357 × 100).

o The Relative Difference between the conversion rates was listed as “39.55%” in the original post and has been corrected to “34.0%”.

o The Level of Confidence (i.e., one minus the observed statistical level of significance, or p-value) was listed as “88.03%” in the original post and has been corrected to “82.9%”, using the assumption of a Normal distribution.

• Second, your approximation of the Z-score statistic is a good one. I don’t know if it’s from a stats book table, but the figure I get using Excel’s inverse Normal distribution function is 1.3704 “Z’s”.

• In this kind of binomial experimentation, the tests are innately two-tailed.

• At more than 8,000 visits and more than 80 successes, with a relative treatment effect greater than 30%, it’s not an irresponsible decision to use small sample statistics to evaluate the results. Provided your testing tools’ sampling methods and your web metrics systems are sound, the presumption of a Normal distribution should be entirely rational and reasonable.
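The two statistical steps in these bullets can be reproduced with Python’s standard library, as a minimal sketch: `statistics.NormalDist.inv_cdf` plays the role of Excel’s inverse Normal function for turning a two-tailed level of confidence back into a z score, and a simple count check screens whether a Normal approximation is reasonable. The helper names are hypothetical, and the threshold of 10 successes and 10 failures is a common textbook rule of thumb (some texts use 5), not necessarily the rule MECLABS applies. Because the published 82.9% is rounded, the recovered z lands near, not exactly on, 1.3704.

```python
from statistics import NormalDist


def z_from_confidence(confidence: float, two_tailed: bool = True) -> float:
    """Invert a level of confidence into a standard Normal z score."""
    if two_tailed:
        # Split the leftover probability (1 - confidence) evenly across both tails.
        return NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return NormalDist().inv_cdf(confidence)


def normal_approx_ok(conversions: int, visits: int, min_count: int = 10) -> bool:
    """Rule-of-thumb check that a Normal approximation to a binomial
    conversion rate is reasonable: enough successes AND enough failures."""
    failures = visits - conversions
    return conversions >= min_count and failures >= min_count


# 82.9% two-tailed confidence, as reported in the corrected post.
print(round(z_from_confidence(0.829), 3))
# Control arm from the corrected post: 38 comments on 4,357 visits.
print(normal_approx_ok(38, 4_357))
```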

So, even though the actual level of confidence for the test to-date was only 83% that the new “Join the Conversation” button inspires more visitors to comment than did the “Submit Comment” version, it’s still a comfort to be able to change the button copy to one that is aesthetically more favorable with a reasonably high level of confidence that at least it won’t hurt the performance of the page.

Thanks once again for “Joining the Conversation”, Greg, and for sharing your ideas. And for giving verifiable proof of why we should all (me in particular) have a little less confidence that we’ll be right in picking a winner in a marketing test, and rely a little more on double-checking and verifying the statistical confidence.

All the best,

Daniel

@Daniel Burstein

Daniel,

Thanks for your reply. I am interested in a couple of things.

First, I am interested in your comment about using two-tailed tests to determine winners in your two-variable tests. I typically do the opposite: I try to get the stakeholders to agree on a hypothesized outcome so I can create one-tailed tests. For lack of a better metaphor, I think of statistical analysis somewhat like gambling: you have to place a bet if you want to have a chance to win.

For example, if I know that the design team will only change button text if we realize more conversions, I will set up a test that will run only until we get enough observations to demonstrate that one-sided win. In this case, the decision doesn’t hinge upon whether the control wins, so I don’t need the second tail. I think that there can be good learning if we find a high effect size in favor of the control, but I wouldn’t structure a two-tail test in this case.
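Running a test “only until we get enough observations” is usually planned with a sample-size estimate. Here is a minimal sketch, assuming the common two-proportion formula without continuity correction, a 5% significance level, and an 80% power target; `visits_per_arm` is a hypothetical helper, and the 30% target lift is an illustrative planning input, not a recommendation. It also shows why a one-sided test needs fewer visits than a two-sided one.

```python
from math import ceil, sqrt
from statistics import NormalDist


def visits_per_arm(base_rate: float, relative_lift: float, alpha: float = 0.05,
                   power: float = 0.80, one_sided: bool = True) -> int:
    """Approximate visits needed in EACH arm to detect `relative_lift`
    over `base_rate` with a two-proportion z-test (no continuity correction)."""
    p1 = base_rate
    p2 = base_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    # A one-sided test puts all of alpha in one tail, so it needs a smaller z.
    z_alpha = NormalDist().inv_cdf(1 - alpha if one_sided else 1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical planning inputs: the control's 0.87% rate as baseline, 30% target lift.
n_one = visits_per_arm(0.0087, 0.30, one_sided=True)
n_two = visits_per_arm(0.0087, 0.30, one_sided=False)
print(n_one, n_two)
```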

Second, I would also like to understand how statistical tests can suggest that a new design will probably not lose to the control in the long run. I suppose we might be able to suggest that a new design is “greater than or equal to” a control if the effect size were low but not statistically significant. However, anytime effect size is high, I think it becomes riskier to assume that a treatment is at least as good as a control. For example, your test showed a high effect size that did not overcome an even higher amount of error (i.e., no statistical significance). In this case, I would want to obtain more observations to reduce my error levels.
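Greg’s second question, and the post’s claim that the new button at least won’t hurt, correspond to what is often called a non-inferiority check: instead of asking whether the treatment wins, ask whether a one-sided lower confidence bound on the difference stays above a tolerated loss. A minimal sketch, assuming a Normal approximation with an unpooled standard error; the helper name, the margin, and the counts are all hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def non_inferior(conv_a: int, n_a: int, conv_b: int, n_b: int,
                 margin: float, confidence: float = 0.95) -> tuple[float, bool]:
    """One-sided non-inferiority check for conversion rates.

    Returns the lower confidence bound on (rate_b - rate_a) and whether it
    clears -margin, i.e. whether the treatment is unlikely to be worse than
    the control by more than `margin` (in absolute rate units).
    """
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Unpooled standard error: we are not assuming the rates are equal.
    se = sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z = NormalDist().inv_cdf(confidence)
    lower = (rate_b - rate_a) - z * se
    return lower, lower > -margin


# Hypothetical counts; tolerated loss of 0.1 percentage points.
lower, ok = non_inferior(100, 10_000, 130, 10_000, margin=0.001)
print(f"lower bound on difference: {lower:.4%}, non-inferior: {ok}")
```

A noisy test (large standard error) pushes the lower bound down, which is why, as Greg suggests, more observations may be needed before claiming the treatment is at least as good.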

(By the way, I personally like the “Join the Conversation” button better than “Submit”.)

Thanks for “joining the conversation” Greg,

You clearly have a deep and varied knowledge of statistics. While I certainly appreciate your help in correcting the error in our blog post and wanted to be clear about that in the previous comment, I think at this point the conversation is getting to a level of depth that isn’t practically helpful to the vast majority of our audience and I need to focus my time on serving that 99.9%.

However, I invite Greg’s professional peers to join the conversation as well and share their input; MECLABS certainly doesn’t have to dominate this conversation.

Thanks.

Daniel,

As I said in another post, I found the “Join the conversation” button unintuitive, in that it didn’t seem to me to be related to publishing my comment.

In fact, I couldn’t even see it.

But from your numbers, it’s obvious that my brain works in weird ways sometimes.

So here’s my take on why I couldn’t see it:

1: Perhaps the biggest problem is the use of dark coloured text on a dark background. In this case blue on green. The visual people should tell you that that creates poor contrast and these particular colours create an optical illusion of fuzzy text. So all together it just makes it hard to see and read.

2: To me “Join the conversation” seems like I’m signing up to something unrelated to the post. Maybe it’s a result of the above point, but it just seemed like a separate item.

3: Rightly or wrongly we’ve all been conditioned to look for Submit or Publish so maybe I’ve just been brainwashed too much.

4: And that gets back to the “value” we’ll all get from pushing the button. I’m not so much looking for value as wanting to complete an action. And I’m looking for a button that will tell me that when I push it my action or intent will be successfully completed.
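Point 1 above can be checked numerically with the WCAG 2 contrast-ratio formula, which compares the relative luminance of two colors computed from linearized sRGB channels. A minimal sketch: the function names are hypothetical, and so are the two hex colors, since the button’s actual blue and green aren’t given. For reference, WCAG 2 Level AA asks for at least 4.5:1 for normal-size text.

```python
def _channel(c: int) -> float:
    """Linearize one 8-bit sRGB channel (WCAG 2 relative luminance formula)."""
    s = c / 255
    return s / 12.92 if s <= 0.03928 else ((s + 0.055) / 1.055) ** 2.4


def luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)


def contrast_ratio(fg: str, bg: str) -> float:
    """WCAG 2 contrast ratio, from 1:1 (identical) to 21:1 (black on white)."""
    lighter, darker = sorted((luminance(fg), luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


# Hypothetical blue text on a hypothetical green button.
print(round(contrast_ratio("#1f4e9a", "#3a8f3a"), 2))
```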

I realise we’re getting into semantics and fine detail here, but for me at least, “Join the conversation” doesn’t match my intent.

Anyway, food for thought or more tests.

I like “Join the conversation”, but it’d be fun to experiment with other phrases. Perhaps “What do you know about the subject?” I guess that’s a bit long, but I wonder whether, with the right formatting, you could make a longer phrase still resemble the typical “Submit” button and draw people to action with it. I do see the importance of familiarity, however. I sometimes don’t want to spend days searching for the call to action.

Thanks for sharing this helpful article. Your site is the best.